Shadow Talking is an interactive installation that turns space into sound using both live and pre-recorded shortwave radio transmissions from numbers stations. The project was created during the COVID-19 lockdown and installed in my living room.

Numbers stations are shortwave radio transmissions used by intelligence agencies to communicate with their agents in the field. The messages, strings of numbers or letters, are sent by automated voice, Morse code, or a digital mode and are encrypted with a one-time pad, so only someone holding a copy of that pad can decode them. Prolific during the Cold War, many of these mysterious stations are still broadcasting today.
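To make the one-time pad concrete, here is a minimal sketch. It assumes a purely numeric message and pad, and uses the digit-by-digit addition modulo 10 (no carrying) often described for hand-ciphered pads; it is not the scheme of any particular station, and the function names are hypothetical. Because each pad digit is random and used only once, the ciphertext says nothing about the message beyond its length.

```python
import secrets


def make_pad(length: int) -> str:
    """Generate a random pad of decimal digits (illustrative; a real pad is used once, then destroyed)."""
    return "".join(str(secrets.randbelow(10)) for _ in range(length))


def encrypt(plain: str, pad: str) -> str:
    """Add each message digit to the matching pad digit, modulo 10 (no carrying)."""
    if len(pad) < len(plain):
        raise ValueError("pad must be at least as long as the message")
    return "".join(str((int(p) + int(k)) % 10) for p, k in zip(plain, pad))


def decrypt(cipher: str, pad: str) -> str:
    """Subtract each pad digit from the matching ciphertext digit, modulo 10."""
    if len(pad) < len(cipher):
        raise ValueError("pad must be at least as long as the message")
    return "".join(str((int(c) - int(k)) % 10) for c, k in zip(cipher, pad))


# Usage: a single five-digit group, a format commonly heard on voice stations.
pad = "73905"
cipher = encrypt("12345", pad)
print(cipher)                # 85240
print(decrypt(cipher, pad))  # 12345
```

Without the pad, "85240" is equally consistent with every possible five-digit message, which is why listeners can hear the numbers but never recover their meaning.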

Concept & Background Research

This project is a reimagining of Susan Hiller’s Witness (2000). The piece is set in a dark room with about 400 exposed speakers hanging from the ceiling. The speakers play recordings of witnesses from around the world describing their encounters with UFOs. Witness weaves these many individual stories into one entwined tapestry of narrative. The common thread holding everything together is the subject matter (UFO encounters) and the witnesses’ need to believe in the paranormal and something otherworldly.

Susan Hiller’s work is often themed around “unearthing the forgotten or repressed… her work explores collective experiences of subconscious and unconscious thought and paranormal activity”.¹ I wanted to reimagine Hiller’s theme of the forgotten or repressed through numbers stations, using the sonification of space and the gathering of individual sources into a unified experience as an alternative, creative method of storytelling.

Interaction

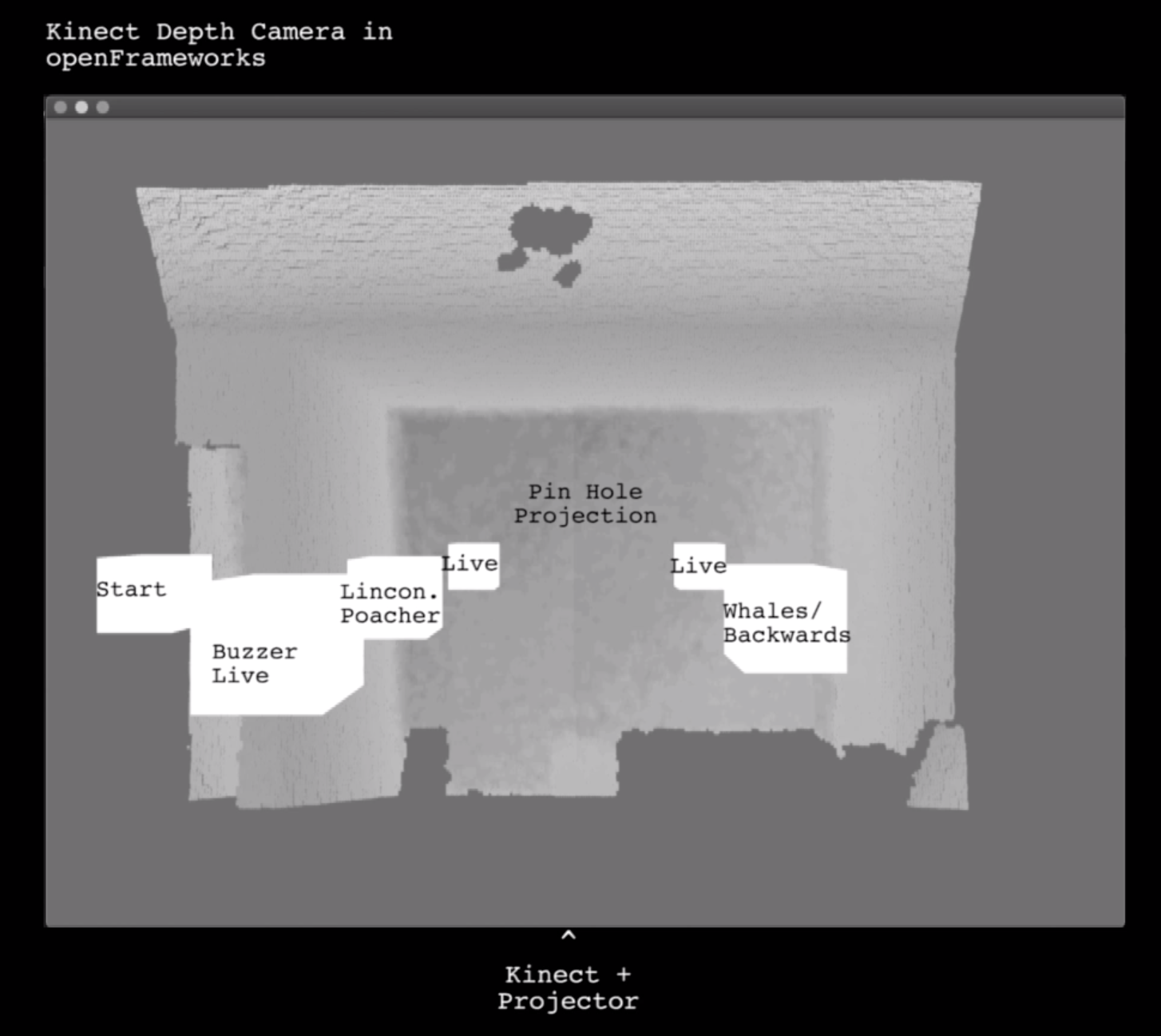

Before entering the room, the agent is given an explanation of numbers stations like the one above. The agent wanders around the dark room listening to radio static, trying to tune in to the encoded transmissions by moving their body through the space. Dim light from a pinhole is projected onto a wall. When the agent locates a station, its transmission plays and a corresponding image is projected through the pinhole.

I want to expose these forgotten relics to pique the participants’ (agents’) interest and create a playful, eerie experience that plays on the oddness of the sounds and the theme of espionage. It was important that the interaction respond sharply: the transmissions start playing loudly and immediately when the agent enters a box, startling them as they sneak around in the dark. The darkness also plays with the idea of the visibility or accessibility of these radio transmissions and their mysteriousness. The transmissions are accessible, but their meaning is unattainable; hence the stumbling around in the dark.

The project’s human-computer interaction also blurs the boundary between physical and virtual space by mapping one onto the other. The participant walks through a space that is already pregnant with sound. They discover where different stations are located in this combined physical and virtual space and become entangled in the embodied experience of mixed reality. This might not feel obvious, because we are so used to the omnipresence of technology around us, but I’m interested in exploring these boundaries.

Software

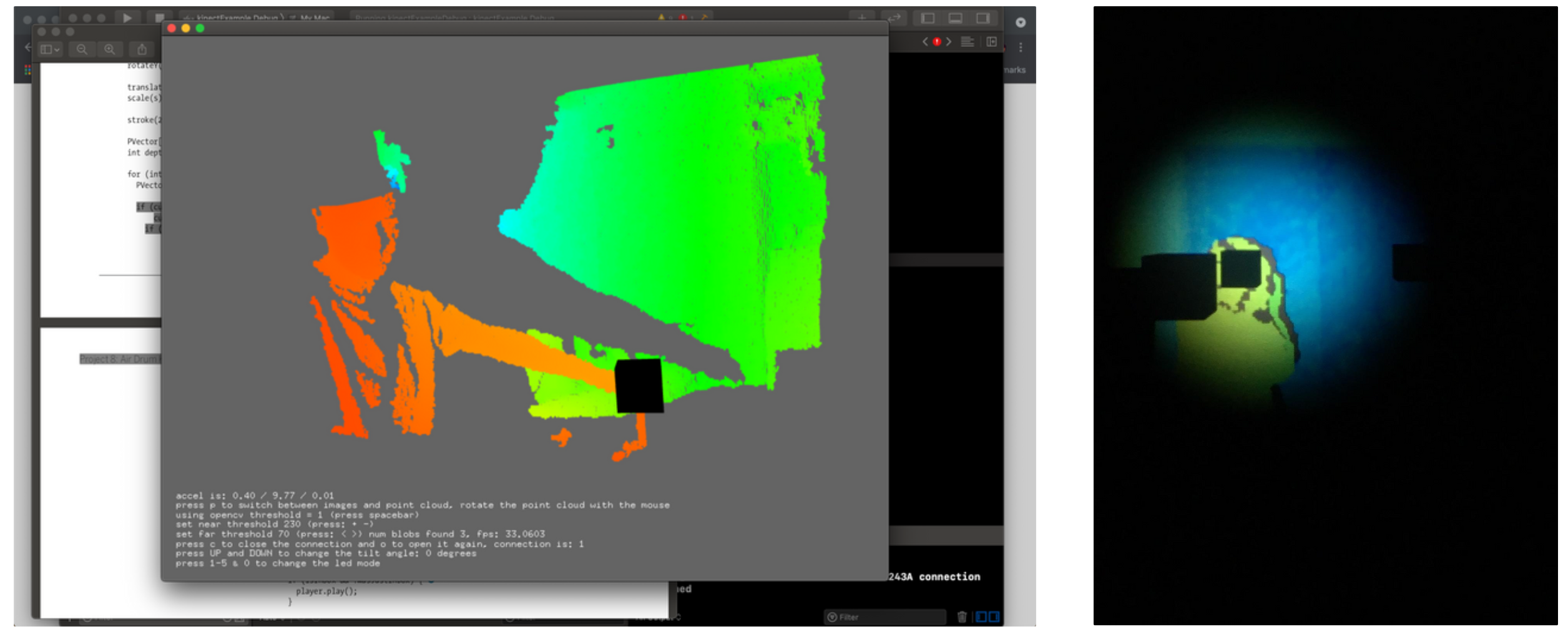

The space is monitored and mapped with sound using a Kinect depth camera and openFrameworks. In openFrameworks, a point cloud is drawn from the 3D scene captured by the depth camera. I used Dan Buzzo’s code as a starting point for learning how to render point clouds with the Kinect. Boxes rendered in the 3D space act as the sound spots, or triggers: a box at the entry point starts the app, and each of the other boxes represents a different numbers station.
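The trigger idea can be sketched in a few lines: back-project each depth pixel into a 3D point, then treat a box as occupied when more than a threshold number of points fall inside it, so stray sensor noise does not set it off. This is a language-neutral illustration, not the project’s openFrameworks code. The Kinect v1 intrinsics below are approximate published calibration values and are assumptions, as are the depth units (millimetres) and the `TriggerBox` name and threshold.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Point = Tuple[float, float, float]

# Approximate Kinect v1 depth-camera intrinsics in pixels (assumed; calibrate your own sensor).
FX, FY = 594.21, 591.04
CX, CY = 339.31, 242.74


def depth_to_point(u: int, v: int, depth_mm: float) -> Point:
    """Back-project depth pixel (u, v) to a camera-space point in millimetres (pinhole model)."""
    z = depth_mm
    x = (u - CX) * z / FX
    y = (v - CY) * z / FY
    return (x, y, z)


@dataclass
class TriggerBox:
    """Hypothetical axis-aligned trigger box in camera space."""
    name: str
    min_corner: Point
    max_corner: Point
    threshold: int = 50  # minimum number of points inside before the box counts as occupied

    def contains(self, p: Point) -> bool:
        """True if point p lies inside the box on all three axes."""
        return all(lo <= c <= hi for lo, c, hi in zip(self.min_corner, p, self.max_corner))

    def is_occupied(self, cloud: Sequence[Point]) -> bool:
        """Count the cloud's points inside the box and compare against the threshold."""
        count = sum(1 for p in cloud if self.contains(p))
        return count > self.threshold


# Usage: a short row of depth pixels 1.7 m away, all landing inside a box spanning 1.5 to 1.9 m.
box = TriggerBox("station_1", (-200, -200, 1500), (200, 200, 1900), threshold=3)
cloud = [depth_to_point(u, 240, 1700) for u in range(330, 350)]
print(box.is_occupied(cloud))  # True
```

Counting points rather than testing a single one is what makes the trigger robust: a body fills a box with many points, while noise contributes only a few.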

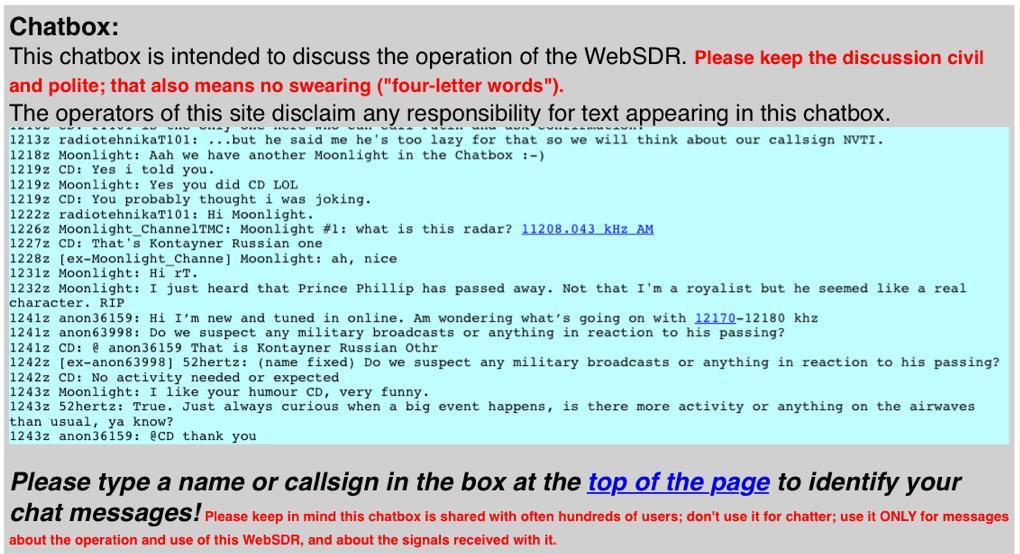

When an agent enters or exits a box, openFrameworks sends a message to a native Node.js web app via Open Sound Control (OSC). For this I adapted the air-drum Processing code from Greg Borenstein’s book Making Things See. The app decides what is played and projected based on these messages, drawing on live broadcasts from online software-defined radios (WebSDR) as well as pre-recorded transmissions.
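Two details sit between the boxes and the app: turning per-frame occupancy into discrete enter and exit events, and packing those events as OSC messages. The sketch below shows both. openFrameworks and Node.js have OSC libraries that handle the packing, so this only spells out the OSC 1.0 wire format to show what travels between the two programs. The `/enter` and `/exit` addresses, the int32 box index (box 0 being the entry box), and `BoxTracker` are hypothetical.

```python
import struct
from typing import List


def osc_string(s: str) -> bytes:
    """OSC-string: ASCII bytes, a null terminator, then null padding to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args: int) -> bytes:
    """Build an OSC 1.0 message whose arguments are all big-endian int32 values."""
    type_tags = "," + "i" * len(args)
    payload = b"".join(struct.pack(">i", a) for a in args)
    return osc_string(address) + osc_string(type_tags) + payload


class BoxTracker:
    """Emits a message only when a box's occupancy changes, not on every camera frame."""

    def __init__(self, box_count: int):
        self.occupied: List[bool] = [False] * box_count

    def update(self, now_occupied: List[bool]) -> List[bytes]:
        messages = []
        for index, (was, now) in enumerate(zip(self.occupied, now_occupied)):
            if now and not was:
                messages.append(osc_message("/enter", index))
            elif was and not now:
                messages.append(osc_message("/exit", index))
        self.occupied = list(now_occupied)
        return messages


# Usage: the agent steps into box 1, stays there for a frame, then leaves.
tracker = BoxTracker(3)
print(tracker.update([False, True, False]))   # one /enter packet for box 1
print(tracker.update([False, True, False]))   # [] (no change, nothing sent)
print(tracker.update([False, False, False]))  # one /exit packet for box 1
```

Each returned packet is a complete OSC message that could be sent as a single UDP datagram (for example with Python's `socket.sendto`). Sending only on state changes is what lets the transmission start instantly on entry without being retriggered every frame the agent stays inside.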

Hardware

Kinect V1

MacBook

Speakers

Projector

Screenshot from the WebSDR chat box the day after Prince Philip’s death: